GenManip: LLM-driven Simulation for Generalizable Instruction-Following Manipulation

Abstract

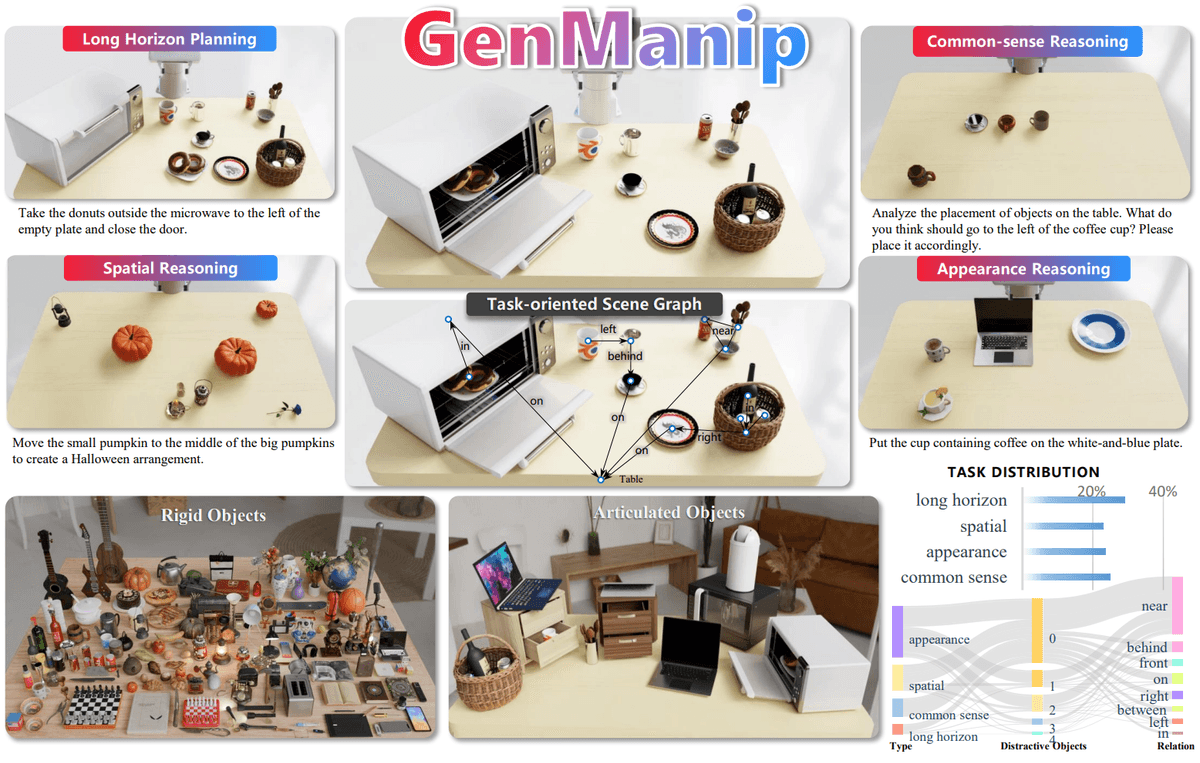

Robotic manipulation in real-world settings remains challenging, especially regarding robust generalization. Existing simulation platforms lack sufficient support for exploring how policies adapt to varied instructions and scenarios. Thus, they lag behind the growing interest in instruction-following foundation models like LLMs, whose adaptability is crucial yet remains underexplored in fair comparisons. To bridge this gap, we introduce **GenManip**, a realistic tabletop simulation platform tailored for policy generalization studies. It features an automatic pipeline that uses a GPT-driven *task-oriented scene graph* to synthesize large-scale, diverse tasks from 10K annotated 3D object assets. To systematically assess generalization, we present **GenManip-Bench**, a benchmark of 200 scenarios refined via human-in-the-loop corrections. We evaluate two policy types: (1) modular manipulation systems integrating foundation models for perception, reasoning, and planning, and (2) end-to-end policies trained through scalable data collection. Results show that while data scaling benefits end-to-end methods, modular systems enhanced with foundation models generalize more effectively across diverse scenarios. We expect this platform to yield critical insights for advancing policy generalization under realistic conditions.
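To make the idea of a task-oriented scene graph more concrete, here is a minimal Python sketch. It is purely illustrative: the abstract does not specify GenManip's actual data format or API, so every name here (`Relation`, `SceneGraph`, `apply_move`, the predicate strings, and the example objects) is a hypothetical assumption. The sketch represents a tabletop scene as a set of (subject, predicate, target) relations and treats an instruction's goal as a smaller graph that the scene must contain.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Relation:
    """One hypothetical spatial relation, e.g. ("apple", "on", "plate")."""
    subject: str
    predicate: str
    target: str


@dataclass
class SceneGraph:
    """A scene (or a goal) expressed as a set of relations. Illustrative only."""
    relations: set[Relation] = field(default_factory=set)

    def add(self, subject: str, predicate: str, target: str) -> None:
        self.relations.add(Relation(subject, predicate, target))

    def satisfies(self, goal: SceneGraph) -> bool:
        # A goal is met when every goal relation is present in the scene.
        return goal.relations <= self.relations


def apply_move(scene: SceneGraph, obj: str, predicate: str, target: str) -> SceneGraph:
    """Return a new scene in which obj's previous relations are replaced by one new relation."""
    kept = {r for r in scene.relations if r.subject != obj}
    return SceneGraph(relations=kept | {Relation(obj, predicate, target)})


# Hypothetical initial layout: both objects rest on the table.
scene = SceneGraph()
scene.add("apple", "on", "table")
scene.add("plate", "on", "table")

# Hypothetical goal for the instruction "Put the apple on the plate."
goal = SceneGraph()
goal.add("apple", "on", "plate")

before = scene.satisfies(goal)
after = apply_move(scene, "apple", "on", "plate").satisfies(goal)
```

Under these assumptions, task success reduces to a subset check between goal relations and scene relations, which is one simple way a benchmark could score a rollout against an instruction.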

Citation

If you use GenManip in your research, please cite our paper:

@inproceedings{gao2025genmanip,
  title={GENMANIP: LLM-driven Simulation for Generalizable Instruction-Following Manipulation},
  author={Gao, Ning and Chen, Yilun and Yang, Shuai and Chen, Xinyi and Tian, Yang and Li, Hao and Huang, Haifeng and Wang, Hanqing and Wang, Tai and Pang, Jiangmiao},
  booktitle={Proceedings of the Computer Vision and Pattern Recognition Conference},
  pages={12187--12198},
  year={2025}
}